Grasping AI: Its Limitations and Responsibilities

Artificial Intelligence (AI) has become a powerful tool in many aspects of daily life, from virtual assistants like Siri and Alexa to advanced chatbots like ChatGPT. However, Sundar Pichai, CEO of Google's parent company Alphabet, recently cautioned against "blindly trusting" what AI tools say. He noted that, despite recent advances, AI models remain "prone to errors," which is why it still matters to maintain a diverse information ecosystem rather than relying on AI alone. The warning is timely, as people increasingly turn to AI for critical information and decisions.

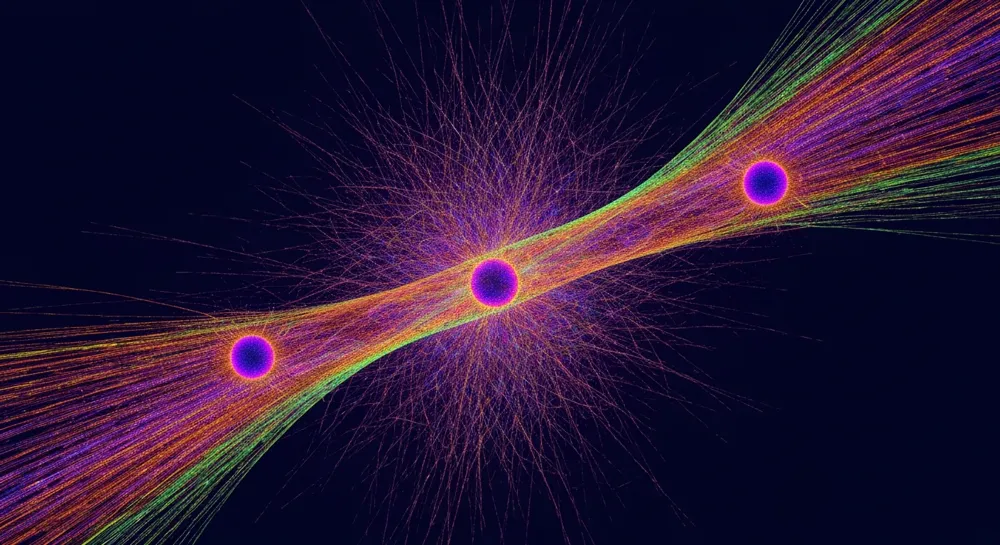

AI systems rely on complex algorithms that analyze vast amounts of data, derive patterns from it, and use those patterns to make predictions. Because the resulting answers are predictions rather than verified facts, they can be wrong even when they sound authoritative. For example, someone who asks a chatbot about a medical condition may expect an accurate answer, but Pichai warns that relying on such answers without checking them can be misleading. Google itself faced backlash over inaccurate AI-generated content after it integrated AI Overviews into Search. Experts likewise point to the risks of using AI for sensitive queries: while AI can be a useful aid in creative work, its outputs should be verified, particularly in critical domains such as health and news.

In closing, while AI technologies such as Google's Gemini 3.0 aim to improve both the user experience and the accuracy of information, responsibility ultimately lies with users to critically assess how credible these tools are. As AI continues to evolve rapidly, staying informed and questioning AI outputs becomes crucial. Readers who want to learn more can explore resources such as Google's AI blog or follow developments in AI research to better understand how these technologies shape the way we interact and access information.
